Touchscreens on a Mac? What would Steve Jobs say?

A decade ago, critics warned us that the inevitable result of putting a touchscreen on a laptop would be "gorilla arm." My, how times have changed.

Image by DALL-E

Quick quiz: How fast is Steve Jobs spinning in his grave right now?

Mark Gurman, the Man Who Scoops Apple, is reporting that Apple is working feverishly to bring iPhone- and iPad-style touchscreen support to the MacBook Pro, with the first devices scheduled to arrive in 2025.

Well … that’s a sea change.

Before he died, Jobs famously dissed the notion of a touchscreen on a laptop. The Apple head honcho said touchscreen laptops would be "ergonomically terrible."

We've done tons of user testing on this, and it turns out it doesn't work. Touch surfaces don't want to be vertical.

It gives great demo but after a short period of time, you start to fatigue and after an extended period of time, your arm wants to fall off. It doesn't work, it's ergonomically terrible.

That was right around the time that Microsoft was beginning a massive push into touch-enabled laptops. And the tech press came down on Steve’s side, hard. I remember a characteristic column in the New York Times from David Pogue, who halfheartedly praised one Windows PC with the memorable line “It has enough jacks to stock a RadioShack.”

But the defining commentary on the subject came from Tim Carmody, who wrote this for Wired in 2010:

Why 'Gorilla Arm Syndrome' Rules Out Multitouch Notebook Displays

But Apple didn't have to do its own user testing. They didn't even have to look at the success or failure of existing touchscreens in the PC marketplace. Researchers have documented usability problems with vertical touch surfaces for decades.

"Gorilla arm" is a term engineers coined about 30 years ago to describe what happens when people try to use these interfaces for an extended period of time. It's the touchscreen equivalent of carpal-tunnel syndrome. According to the New Hacker's Dictionary, "the arm begins to feel sore, cramped and oversized -- the operator looks like a gorilla while using the touchscreen and feels like one afterwards."

Ah, there it is. Indeed, the idea that we would be forced to spend our working days tapping on a screen in front of us … well, that was terrifying!

Pogue was even more emphatic in 2013, when he wrote “Why Touch Screens Will Not Take Over” for Scientific American.

Using a series of fluid, light finger taps and swipes across the screen on a PC running Windows 8, you can open programs, flip between them, navigate, adjust settings and split the screen between apps, among other functions. It's fresh, efficient and joyous to use—all on a touch-screen tablet.

But this, of course, is not some special touch-screen edition of Windows. This is the Windows. It's the operating system that Microsoft expects us to run on our tens of millions of everyday PCs. For screens that do not respond to touch, Microsoft has built in mouse and keyboard equivalents for each tap and swipe. Yet these methods are second-class citizens, meant to be a crutch during these transitional times—the phase after which, Microsoft bets, touch will finally have come to all computers.

At first, you might think, “Touch has been incredibly successful on our phones, tablets, airport kiosks and cash machines. Why not on our computers?”

I'll tell you why not: because of “gorilla arm.”

If those predictions had been right, those first touchscreen PCs would have been colossal failures. Sore-armed consumers would not be suckered into buying a second touchscreen-equipped PC and the entire category would have become a footnote in PC history.

But that’s not what happened.

Touchscreens arrived on iPads in 2010, and they started arriving on Windows 7 laptops shortly thereafter and then on Windows 8 PCs in a wave. I remember getting a call from a top exec at a leading PC OEM in 2009 asking if touch was really going to be a thing. “Yes, it is,” I recall telling him. “It will be years before it matters, but you better get started now.”

The early years of touch-enabled Windows PCs were messy. But Microsoft and its OEM partners managed to stay focused, and 15 or so years later your selection of touch-enabled Windows PCs is pretty impressive.

So, what changed? I reached out to Tim Carmody, who wrote that seminal Wired article, for an update. Here’s an edited transcript of our conversation:

Me: I am sure you saw the news (a Mark Gurman scoop, natch) that Apple is working on adding touchscreens to the Mac? That sent me on a trip to the Wayback Machine, where I found this article from you, predicting that multitouch notebook displays would never take off. Has your thinking evolved since that article?

Tim: I did see this, and was surprised, just because Apple has resisted putting touchscreens on the Mac for so long. I feel pretty good about a prediction that lasted 15 years. Never say never, but in computing terms, that’s a lifetime. I do still think there is an ergonomic issue that makes touchscreens unwieldy as a primary mode of interaction on a laptop.

Me: Indeed! Touch is terrible for Win32 Windows apps, or for classic macOS apps, with their tiny touch targets. But web apps are often built with the assumption that people will use touch on a phone or iPad. And newer apps for both platforms are being developed with that philosophy.

Tim: Right. And almost all those web apps are now responsive, able to work on almost all platforms. We’re now more than 15 years into the touchscreen revolution, and people’s assumptions about computing interfaces have changed. The more people get accustomed to manipulating objects directly on the screen, and the more development time goes into making those interactions more powerful, the more that this kind of interface becomes desirable in other form factors.

Me: The Apple platforms have always been phenomenally conservative. They waited till the entire app landscape had caught up and now they will introduce touch across the product line without fear that they will be told "It doesn't work well with Acrobat 2009"...

Tim: There was a lot of speculation, after the iPad launched, that Apple was going to make everything look like the iPhone. And that really misunderstood what kind of company Apple is. Yes, they have had these breakthrough products, but they are really quite conservative.

That’s the difference between Microsoft and Apple, distilled. Microsoft has no problem releasing version 1.0 of a technology and waiting for the rest of the world to catch up. Which might or might not happen. Apple keeps those technologies in the lab, only releasing them as products when they’re confident they will quickly achieve success in the marketplace.

I have a theory about Apple’s long-term strategy. It starts with the initial work to bring iOS apps to the Mac, which is already done.

Any iPhone or iPad apps you purchase that work on your Mac with Apple silicon are shown when you view your purchased apps in the App Store.

When using one of these apps on your Mac, you can use touch alternatives to interact with the app—for example, press and hold the Option key to use a trackpad as a virtual touch screen.

Well, that’s awkward. You have an app running on your MacBook screen, and it looks exactly the same as it does on your iPad, but you have to use some funky combination of keyboard taps and touchpad gestures to use it? Isn’t that what David Pogue warned us about a decade ago? No wonder Apple doesn’t talk much about this capability.

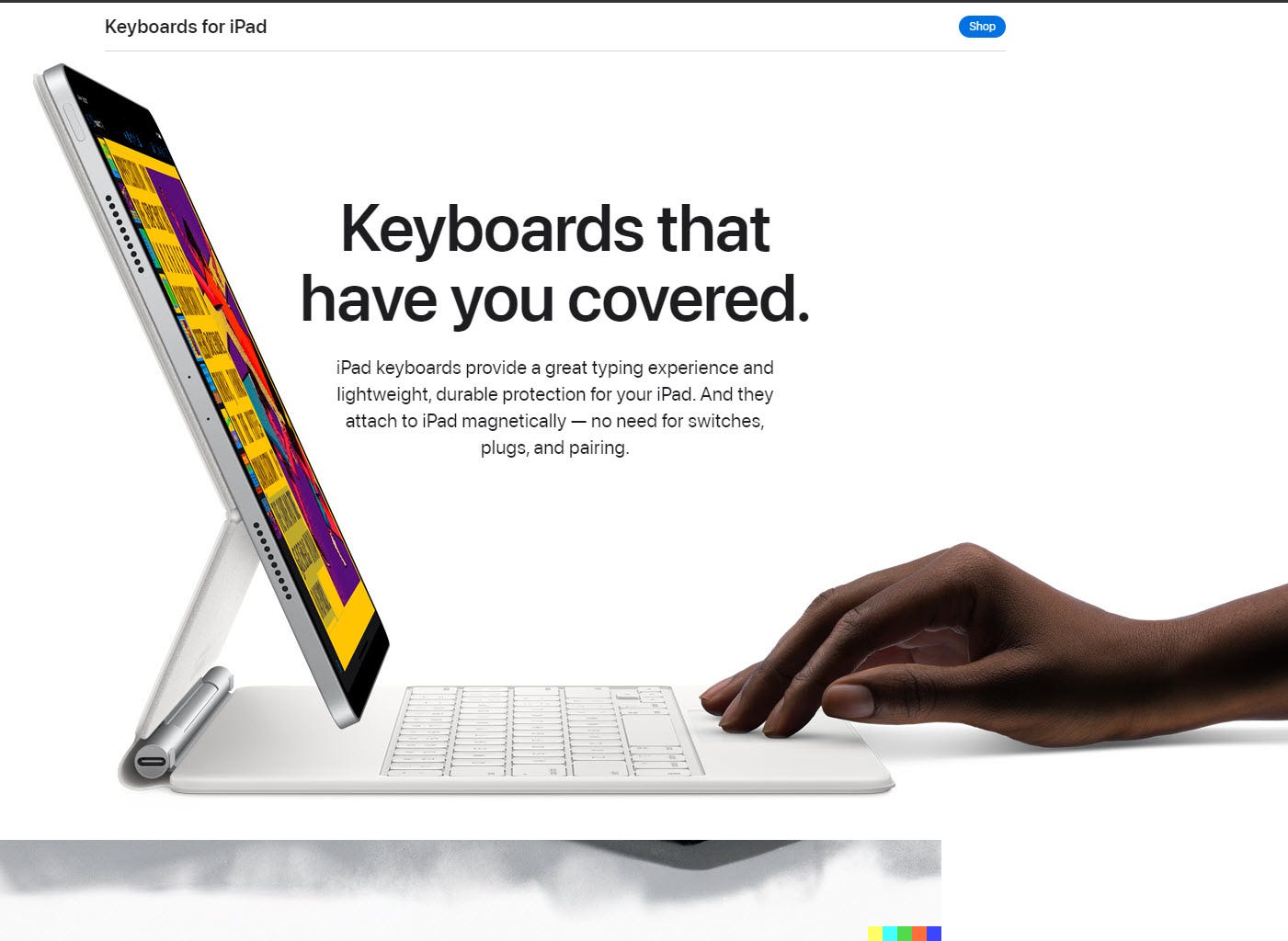

Over the years, Apple has steadily transformed its iPad, adding smart keyboards with trackpads and the option to connect a mouse. The end result looks an awful lot like a laptop, don’t you think?

Of course, an iPad isn’t really a laptop replacement; instead, it’s a device that can occasionally stand in for a laptop, so you don’t have to lug two devices in your travel bag. If the iPad-apps-on-Mac story is coming together, it means that a Mac can occasionally stand in for an iPad. And the huge inventory of iPad apps capable of running on a Mac will be the main focus of Apple’s touchscreen strategy for the Mac platform.

All of that is consistent with Steve’s original skepticism about touch on a laptop. But it’s also in keeping with the evolution of mainstream computing platforms, which have steadily added input modes through the years. In the beginning, there was the command line. Then came the mouse, followed by touchscreens and then by voice input.

So, what’s next? The holy grail of user interface is one that transforms your thoughts into actions, with no physical interaction required. I for one welcome our new AI overlords and really wish that ChatGPT could have written this post for me.

Thank you for reading Ed Bott's READ ME.